- Green versionCheck

- Green versionCheck

- Green versionCheck

- Green versionCheck

Jisouke's web crawler tool can collect web page text, pictures, tables, hyperlinks and other web page elements. It can capture web page data with no limit on depth and breadth. It can be visualized without programming. The web page content can be harvested after it is visible, allowing you to easily handle web pages. Data, use this data to find potential customers, conduct data research, explore business opportunities, etc., allowing you to play with big data as you like. It is a must-have tool for students, webmasters, e-commerce companies, researchers, HR...

Software features

The web is like a large database, which contains all kinds of valuable information. When you need to collect certain specific information, you may often face the following dilemma:

If you have not systematically learned programming languages such as Python, Ruby, PHP, Perl, Javascript, and java, it is too difficult to implement data collection by writing code.

Although there are many web crawlers and web scraping software, they are difficult to learn and difficult for beginners to get started.

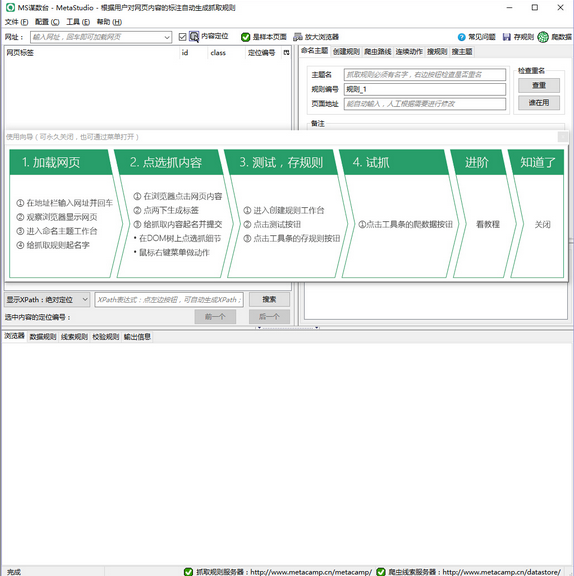

GooSeeker grows together with "technical noobs". Adhering to this purpose, Jisouke GooSeeker crawling software is simple to operate and completely visual. It does not require programming skills and can be easily mastered if you are familiar with computer operations:

When defining the collection rules, use mouse clicks to tell the Jisouke software what content to crawl. The system will automatically generate the crawling rules immediately. The web crawler's workflow program will automatically adapt according to the characteristics of the web page. Dragging and editing acquisition streams are redundant;

When the program is collecting, Jisouke's high simulation simulates real-person operations, which can automatically log in, enter query conditions, click links, click buttons, etc. It can also automatically move the mouse, automatically change the focus, and avoid robots to judge the program;

What you see is what you get during the entire collection process, and the traversed link information, crawling result information, error information, etc. will be reflected in the software interface in a timely manner. Make your entire operation clear and complete your tasks with a relaxed mood.

Template resource application

GooSeeker's template resource application feature allows you to obtain data easily and quickly.

In the Jisouke resource library, crawling rules are stored in different categories. Available crawling rules can be searched through keywords or target page URLs. On the details page of the crawling rule, you can carefully examine whether the crawling results of a rule meet your needs. If so, just click the "Download" button to start the Jisouke web crawler in the member center with one click. Get the data you want. for example:

Rules for crawling prices and reviews on e-commerce websites

Crawl rules for contacts and contact numbers on B2B websites

Rules for capturing web content such as messages, topics, interests, and activities on Weibo

Save yourself the trouble of defining your own crawling rules and use the published rules just like applying the web page template directly. For beginners or business goal-oriented users, template resource application is a shortcut.

Universal web crawler

Compared with other web crawlers, GooSeeker web crawler is far superior in terms of ease of use. Coupled with the unique function of starting the web crawler with one click and the support of the entire [resource sharing platform], it has greatly reduced the burden on users. technical basic conditions. However, web crawling is a technical job after all, and it requires proper mastery of basic knowledge such as HTML. In other words, it takes some time to learn how to use this software. Now that some investment has been made (even in terms of time), the versatility of the web crawler is very important.

Jisouke web crawler has 8 years of experience in the industry and uses the powerful Firefox browser core. What you see is what you get. Many dynamic contents do not appear in HTML documents, but are loaded dynamically, which does not affect the accurate crawling of them. Moreover, there is no need for a network sniffer to analyze network communication messages from the bottom layer, and the crawling rules can be defined visually just like crawling static web pages. Coupled with the developer interface, it can simulate very complex mouse and keyboard movements and capture while moving.

The crawling scope can be summarized into the following categories:

Various website types: news, forums, e-commerce, social networking sites, industry information, financial websites, corporate portals, government websites and other websites can be crawled;

Various web page types: server-side dynamic pages, browser-side dynamic pages (AJAX content), and static pages can all be captured. It can even capture endless waterfall flow pages, web QQ session processes, etc. Jisouke crawler can crawl AJAX/Javascript dynamic pages, server dynamic pages and other dynamic pages by default without any other settings; it can even automatically scroll to crawl dynamically loaded content.

Like PC websites, mobile websites can be crawled: crawlers can simulate mobile agents;

All languages and texts: no special settings are required, all language encodings are automatically supported, and international languages are treated equally;

It can be seen that by using Jisouke web crawler, the entire Internet becomes your database!

Members help each other to capture

This is a special case of parallel crawling by a crawler group. Using this feature, you can quickly aggregate massive amounts of data at low cost. The scene is described as follows:

When you want to capture data in large quantities quickly or frequently, multiple computers are needed from the perspective of data volume, and your own computer is not enough.

Time is tight, so the density of collection activities is very high. For example, if you collect many messages from Weibo in one second and only use your own computer, it will be easily blocked by the target website.

The target website has strict limits on the amount of collection, for example, grabbing air ticket prices

You need to log in before crawling. You need to log in with a large number of accounts at the same time.

Then, you can create a working group and invite netizens to join. In order to get more responses from members, you can send "red envelopes", and the social friends who accept the task will use their computers to help you share the data collection. In the community, others will help you collect data. Of course, you can also help community members capture data and earn more points. When there are tasks later, you can reward the points to community members.

Please pay attention to the following during use:

Publish: You can publish it in the community circle. When publishing, select the bounty type, bounty points, and time limit. After the bounty is published, it cannot be deleted or edited.

Reply: refers to replying and providing help with bounty tasks

No limit to depth, no limit to breadth

When collecting data from websites, especially when collecting large websites, the collected data are often located on web pages at different levels of the website, which greatly increases the difficulty of data collection by web crawlers. Comprehensive web crawlers like Baidu or Google can automatically manage the depth and breadth of crawling. What we are discussing here is focused on web crawlers, hoping to obtain data at the lowest possible cost, and hoping to obtain only the required web content. The so-called focus mainly includes two aspects:

The crawled web pages (regardless of depth or breadth) are planned in advance, unlike comprehensive web crawlers that automatically discover new clues that develop into depth and breadth. It can be seen that crawling within a controlled range will inevitably reduce costs.

The content crawled from the web page is also predefined, which is the so-called crawling rule. Unlike comprehensive web crawlers that capture the entire text content of a web page. It can be seen that precise crawling can be used for data mining and intelligence analysis because the noise has been accurately filtered out.

GooSeeker is such a focused web crawler, but it is different from other collectors on the market:

Jisouke does not set limits on the depth and breadth of the website, allowing you to plan it as you wish. Jisouke wants to be a purely open platform for big data capabilities, and will not hide this capability in a paid version.

Jisouke has no limit on the number of collections and will not deduct points or fees based on time or the number of web pages. You can download the entire Internet.

FAQ

Recently, Jisouke Technical Support Center received some360 Security GuardAccording to user feedback, during the installation and use of Jisouke, some problems were encountered due to false positives from 360, such as server connection failure, individual files being deleted, and warning messages from 360 constantly appearing during the installation process. These problems have caused trouble to some users and affected their normal data acquisition. This article gives countermeasures and attaches the test report of Jisouke by a third-party testing agency.

it works

it works

it works