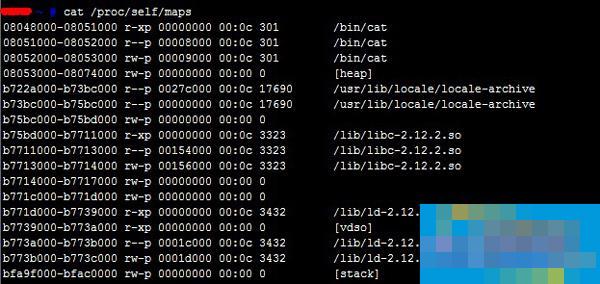

Memory usage on a Linux host should stay below a reasonable share of total RAM. When usage is too high the system keeps running, but it slows down. This article shows how to analyze memory exhaustion on Linux.

While testing NAS performance, I ran a long write workload with fstest and looked into why performance was poor. Memory usage on the server host turned out to be very high.

1. First, check memory usage with top:

# top -M

top - 14:43:12 up 14 days, 6 min, 1 user, load average: 8.36, 8.38, 8.41

Tasks: 419 total, 1 running, 418 sleeping, 0 stopped, 0 zombie

Cpu(s): 0.0%us, 0.2%sy, 0.0%ni, 99.0%id, 0.7%wa, 0.0%hi, 0.0%si, 0.0%st

Mem: 63.050G total, 62.639G used, 420.973M free, 33.973M buffers

Swap: 4095.996M total, 0.000k used, 4095.996M free, 48.889G cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

111 root 20 0 0 0 0 S 2.0 0.0 0:25.52 ksoftirqd/11

5968 root 20 0 15352 1372 828 R 2.0 0.0 0:00.01 top

13273 root 20 0 0 0 0 D 2.0 0.0 25:54.02 nfsd

17765 root 0 -20 0 0 0 S 2.0 0.0 0:11.89 kworker/5:1H

1 root 20 0 19416 1436 1136 S 0.0 0.0 0:01.88 init

. . . . .

Memory is almost completely used, so which process is holding it? Sorted by %MEM, even the largest process in top accounts for only a few tenths of a percent.
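Before looking for a culprit, it helps to separate page cache from everything else. The sketch below is only an illustration: it assumes that in this older top layout "used" includes buffers and cached (as in the "-/+ buffers/cache" line of free) and that the k/M/G suffixes are 1024-based. The function names are hypothetical, and the figures are copied from the Mem and Swap lines above.

```python
def to_gib(value):
    """Convert a top -M style value such as '62.639G' or '33.973M' to GiB.

    Assumes 1024-based suffixes (k, M, G, T).
    """
    factors = {"k": 1 / 1024**2, "M": 1 / 1024, "G": 1.0, "T": 1024.0}
    return float(value[:-1]) * factors[value[-1]]


def used_excluding_cache(used, buffers, cached):
    """Memory in use once buffers and page cache are subtracted, in GiB."""
    return to_gib(used) - to_gib(buffers) - to_gib(cached)


# Values taken from the top output shown above.
remaining = used_excluding_cache("62.639G", "33.973M", "48.889G")
print(f"{remaining:.2f} GiB used outside buffers/cache")
```

Under those assumptions, most of the "used" figure is page cache, but well over 10 GiB is still held by something that no process's RES column accounts for, which is why kernel memory is the next thing to inspect.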

2. Check kernel-space (slab) memory usage with vmstat -m:

# vmstat -m

Cache Num Total Size Pages

xfs_dqtrx 0 0 384 10

xfs_dquot 0 0 504 7

xfs_buf 91425 213300 384 10

fstrm_item 0 0 24 144

xfs_mru_cache_elem 0 0 32 112

xfs_ili 7564110 8351947 224 17

xfs_inode 7564205 8484180 1024 4

xfs_efi_item 257 390 400 10

xfs_efd_item 237 380 400 10

xfs_buf_item 1795 2414 232 17

xfs_log_item_desc 830 1456 32 112

xfs_trans 377 490 280 14

xfs_ifork 0 0 64 59

xfs_da_state 0 0 488 8

xfs_btree_cur 342 437 208 19

xfs_bmap_free_item 89 288 24 144

xfs_log_ticket 717 966 184 21

xfs_ioend 726 896 120 32

rbd_segment_name 109 148 104 37

rbd_obj_request 1054 1452 176 22

rbd_img_request 1037 1472 120 32

ceph_osd_request 548 693 872 9

ceph_msg_data 1041 1540 48 77

ceph_msg 1197 1632 232 17

nfsd_drc 19323 33456 112 34

nfsd4_delegations 0 0 368 10

nfsd4_stateids 855 1024 120 32

nfsd4_files 802 1050 128 30

nfsd4_lockowners 0 0 384 10

nfsd4_openowners 15 50 392 10

rpc_inode_cache 27 30 640 6

rpc_buffers 8 8 2048 2

rpc_tasks 8 15 256 15

fib6_nodes 22 59 64 59

pte_list_desc 0 0 32 112

ext4_groupinfo_4k 722 756 136 28

ext4_inode_cache 3362 3728 968 4

ext4_xattr 0 0 88 44

ext4_free_data 0 0 64 59

ext4_allocation_context 0 0 136 28

ext4_prealloc_space 42 74 104 37

ext4_system_zone 0 0 40 92

Cache Num Total Size Pages

ext4_io_end 0 0 64 59

ext4_extent_status 1615 5704 40 92

jbd2_transaction_s 30 30 256 15

jbd2_inode 254 539 48 77

. . . . . . . .

Two caches stand out with very high object counts: xfs_ili and xfs_inode. Together they take up a large amount of kernel memory.
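To put rough numbers on this, the slab table can be ranked by estimated footprint. This is a minimal sketch, assuming the Total column is the number of allocated objects and Size is the object size in bytes, so Total × Size approximates each cache's memory (slab overhead is ignored). The helper name rank_slab_caches is hypothetical, and the sample rows are copied from the output above.

```python
SAMPLE = """\
Cache Num Total Size Pages
xfs_buf 91425 213300 384 10
xfs_ili 7564110 8351947 224 17
xfs_inode 7564205 8484180 1024 4
nfsd_drc 19323 33456 112 34
ext4_inode_cache 3362 3728 968 4
"""


def rank_slab_caches(text):
    """Return (cache_name, estimated_bytes) pairs, largest first."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # Skip blank lines and the header that vmstat repeats periodically.
        if len(parts) != 5 or parts[0] == "Cache":
            continue
        name, _num, total, size, _pages = parts
        rows.append((name, int(total) * int(size)))
    return sorted(rows, key=lambda row: row[1], reverse=True)


ranked = rank_slab_caches(SAMPLE)
for name, nbytes in ranked[:3]:
    print(f"{name:<20} {nbytes / 1024**3:6.2f} GiB")
```

On a live host the same function could be fed the real output, for example via subprocess.run(["vmstat", "-m"], capture_output=True, text=True).stdout, which typically requires root. With the sample rows, xfs_inode comes to roughly 8 GiB and xfs_ili to a little under 2 GiB by this estimate.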
